I have recently been thinking quite a bit about what make a piece of software successful. Very early in my career, I got to temper my idealistic view of computer science and the world in general, quite well captured by the saying “you don’t have to be the best to be the first”. As I progress in my professional journey, I have been trying to identify key aspects that make a software go head and shoulders above their competitors or see a exponential growth in a niche of the market not exploited at the time. While it is most likely impossible to find the 3 magic words you have to pronounce to make the perfect software appear, I am still going to pretend I did this, for web traffic purposes, because I have no integrity. All bad humor aside, and for the sake of readability, I did try to funnel my thinking into 3 major aspects which, combined, are a successful piece of software. Quick aside: the purpose of this piece is not to dive into what technical aspect of a piece of software is valuable, but rather to present the software features to which the market responds positively. With that out of the way, let me present you the current state of my cogitation: the perfect software is:

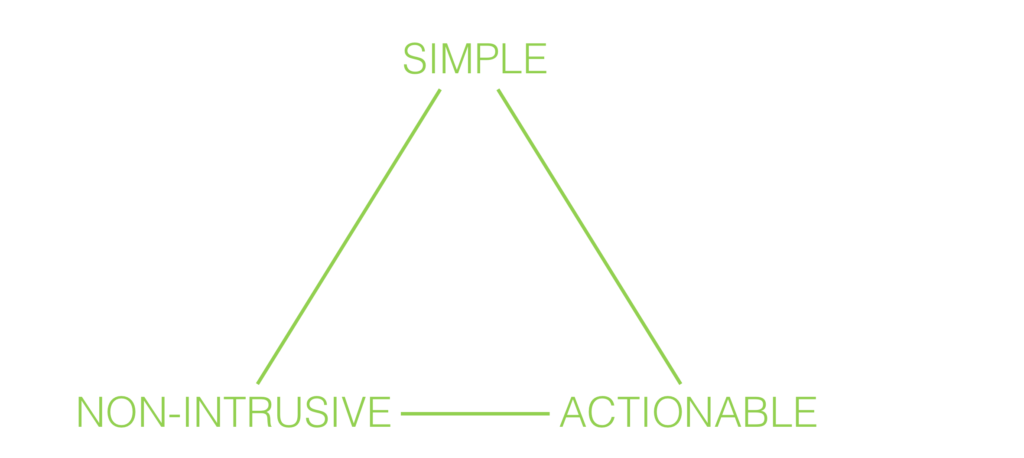

SIMPLE

Simplicity is essential for the end-user to open their eyes. While the algorithms, architecture and other under-the-hood building blocks can and will most likely be complex, the idea here is to present something that is simple to understand. Simplicity can be driven by multiple factors. It could be for instance the front end of your application. This is why UI/UX is such a sought-after skill, and while it is predominant in the B2C industry it is severely underused in the B2B world. It could also be driven by the product packaging. If building a platform with many potential uses, packaging them into specific solutions recognized by the industry is a fair way to achieve simplicity. It could also be targeted: focusing on solving the problems of one vertical for instance.

NON-INTRUSIVE

This characteristic is epitomized by the success of cloud computing. Software As A Service particularly is the perfect example of non-intrusiveness being a successful business model: end users do not want to have to install and maintain software. It is an obvious cost reduction feat for big enterprises, it is just as much a reality for consumers: no one wants to have to install a software on their computer, and the ones we do install are the ones we hate the most (he who had no complaint about Microsoft Word cast the first stone). Even more interesting, web software like appointment booking, ticket sales and so on are considered websites and not pieces of software, but I digress. That being said, and as I argued as early as last week, SaaS isn’t the only model and non-instrusiveness can be characterized by other traits. Backward compatibly or maintenance of current set of existing skills and application is a great way to ensure a non intrusive model. One of the reason why I think disruption is nonsense, see previous rant.

ACTIONABLE

Being simple and non-intrusive are essential qualities, but your software must actually do something in order to be valuable. The important question here is: what’s in it for your user? What is the value? And I don’t think that a value proposition such as “we are doing it better than the others” is enough, nor is “imagine what you could do with that”. You need to be able to be able to show tangible results right off the bat, drive your customer through a story of what they will be able to do now that they weren’t able to do before. This feature is in my opinion one of the hardest and most often forgotten feature for a software to possess, especially put in relation with the other two. Indeed, innovation while maintaining non-intrusiveness could be seen as an oxymoron. In reality, the perfect unique innovative actionable value that of which no one thought before does not exist. That being said, many tech companies today start by building technical prowesses instead of focusing on creating value. My recommendation is to think about the value first, then focus on making the solution simple and non-intrusive, which is ironically why this article is written in the opposite order.

Conclusion

Can a piece of software truly possess all these qualities fully? Probably not, but at I think it is at least an ideal to strive for. As mentioned awkwardly at the beginning of this article, this is also a very preliminary assessment of my thought process. I do believe that if a piece of software possess a good balance of theses 3 features, it is set for success. More importantly, I think that these features should drive the development of new softwares. I know that I have a few ideas about what to develop, and I’m going to make sure to keep that in mind.